Zhe Zhang

Fusing Wearable IMUs with Multi-View Images for Human Pose Estimation: A Geometric Approach

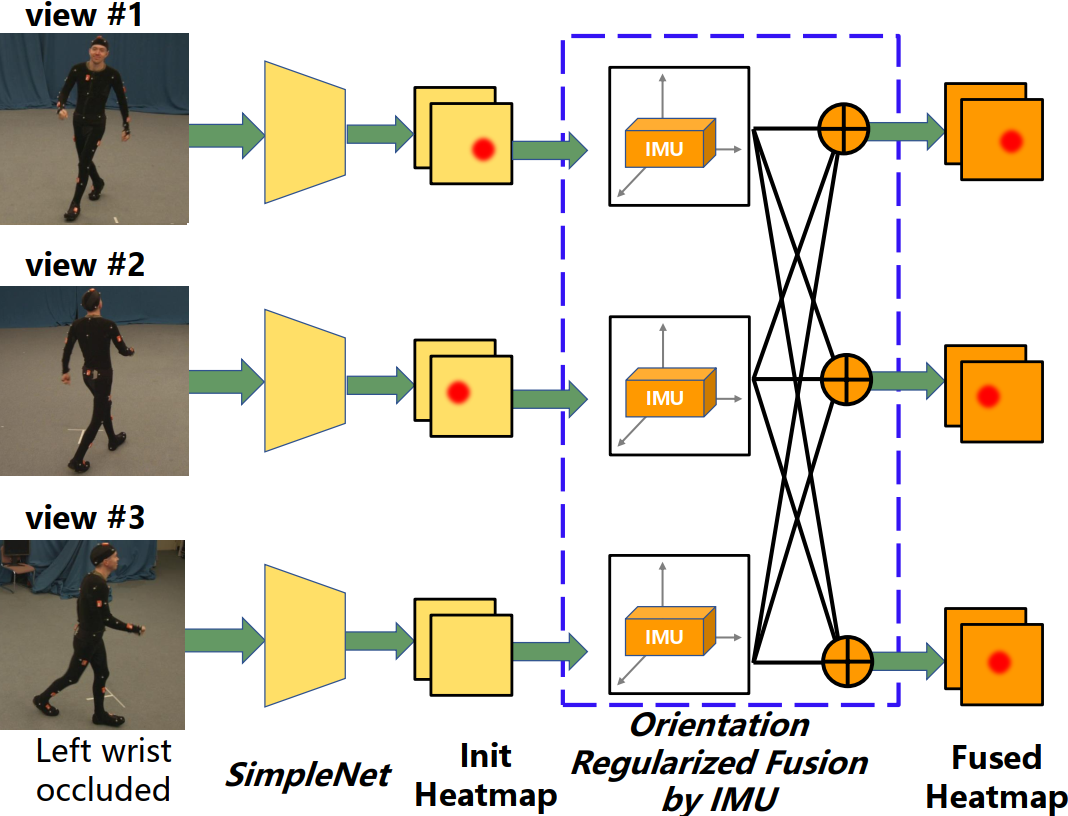

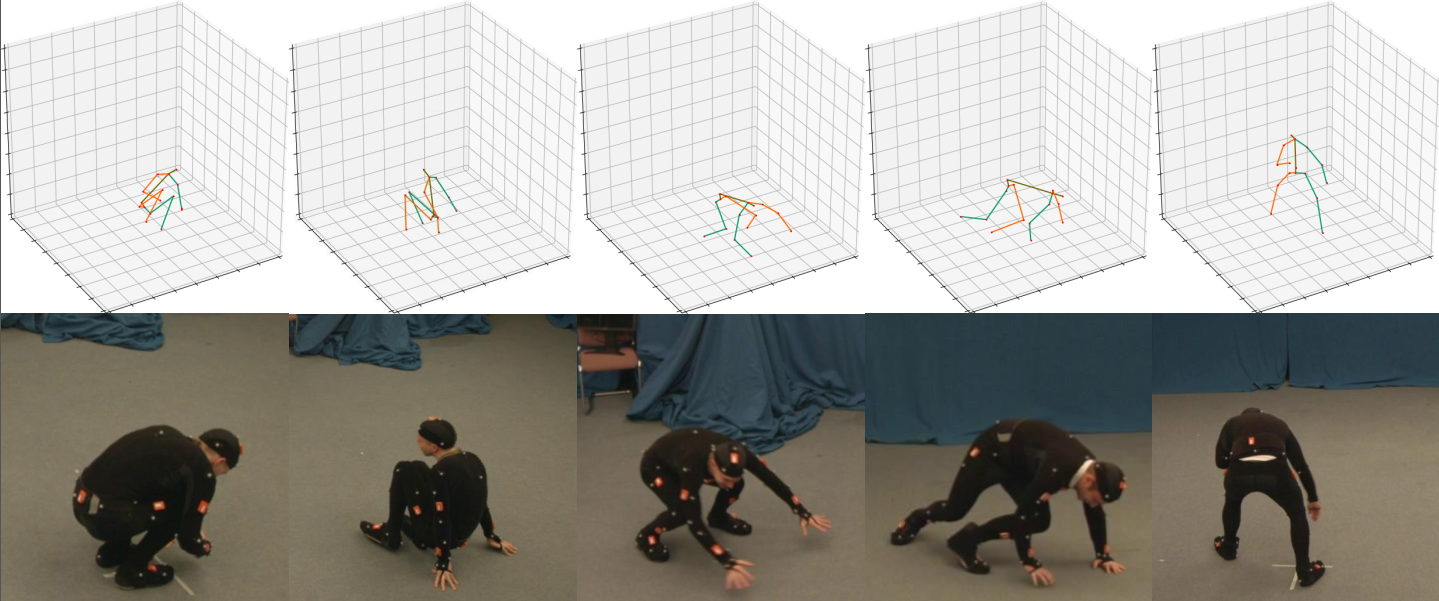

Abstract

We propose to estimate 3D human pose from multi-view images and a few IMUs attached to a person’s limbs. The approach first detects 2D poses from the two signals and then lifts them to 3D space. We present a geometric approach that reinforces the visual features of each pair of joints based on the IMUs. This notably improves 2D pose estimation accuracy, especially when one joint is occluded. We call this approach the Orientation Regularized Network (ORN). We then lift the multi-view 2D poses to 3D space with an Orientation Regularized Pictorial Structure Model (ORPSM), which jointly minimizes the projection error between the 3D and 2D poses and the discrepancy between the 3D pose and the IMU orientations. This simple two-step approach reduces the error of the state of the art by a large margin on a public dataset.
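To make the ORPSM objective described in the abstract more concrete, the following is a minimal sketch of a cost that combines multi-view projection error with an IMU orientation term for a single limb. It is an illustration under stated assumptions, not the authors' implementation: the function names, the use of 3x4 camera matrices, the `1 - cosine` discrepancy, and the `weight` parameter are all hypothetical choices made for this example.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X (3,) with a 3x4 camera matrix P to pixel coordinates (2,)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def reprojection_error(cameras, joints_2d, X):
    """Sum of squared pixel distances between projections of X and the per-view 2D detections."""
    return sum(np.sum((project(P, X) - u) ** 2) for P, u in zip(cameras, joints_2d))

def orientation_discrepancy(X_parent, X_child, imu_dir):
    """Assumed discrepancy: 1 - cosine between the candidate limb direction and the IMU direction."""
    limb = X_child - X_parent
    limb = limb / np.linalg.norm(limb)
    imu_dir = imu_dir / np.linalg.norm(imu_dir)
    return 1.0 - float(limb @ imu_dir)

def joint_cost(cameras, parent_2d, child_2d, X_parent, X_child, imu_dir, weight=1.0):
    """Hypothetical combined cost for one limb: projection error plus weighted orientation term."""
    return (reprojection_error(cameras, parent_2d, X_parent)
            + reprojection_error(cameras, child_2d, X_child)
            + weight * orientation_discrepancy(X_parent, X_child, imu_dir))

# Usage: two toy cameras and one limb whose 2D detections are exact projections.
P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = np.hstack([np.eye(3), np.array([[1.0], [0.0], [0.0]])])
cameras = [P1, P2]
X_parent = np.array([0.0, 0.0, 5.0])
X_child = np.array([0.0, 1.0, 5.0])
parent_2d = [project(P, X_parent) for P in cameras]
child_2d = [project(P, X_child) for P in cameras]

# The cost is lowest when the candidate limb direction agrees with the IMU direction.
aligned = joint_cost(cameras, parent_2d, child_2d, X_parent, X_child, np.array([0.0, 1.0, 0.0]))
misaligned = joint_cost(cameras, parent_2d, child_2d, X_parent, X_child, np.array([1.0, 0.0, 0.0]))
print(aligned, misaligned)
```

In this sketch, a candidate 3D limb that reprojects well in every view but points in a direction inconsistent with the IMU reading is still penalized, which is the intuition behind regularizing the 3D pose with orientation measurements.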

Demo Video

Code

- Paper: arXiv

- Code: aka.ms/imu-human-pose (Microsoft official GitHub, not yet available)

- TotalCapture Dataset Toolbox: zhezh/TotalCapture-Toolbox

- Poster: PDF

- CVPR Virtual Meeting Online Materials:

BibTeX

@inproceedings{zhe2020fusingimu,
  title={Fusing Wearable IMUs with Multi-View Images for Human Pose Estimation: A Geometric Approach},
  author={Zhang, Zhe and Wang, Chunyu and Qin, Wenhu and Zeng, Wenjun},
  booktitle={CVPR},
  year={2020}
}